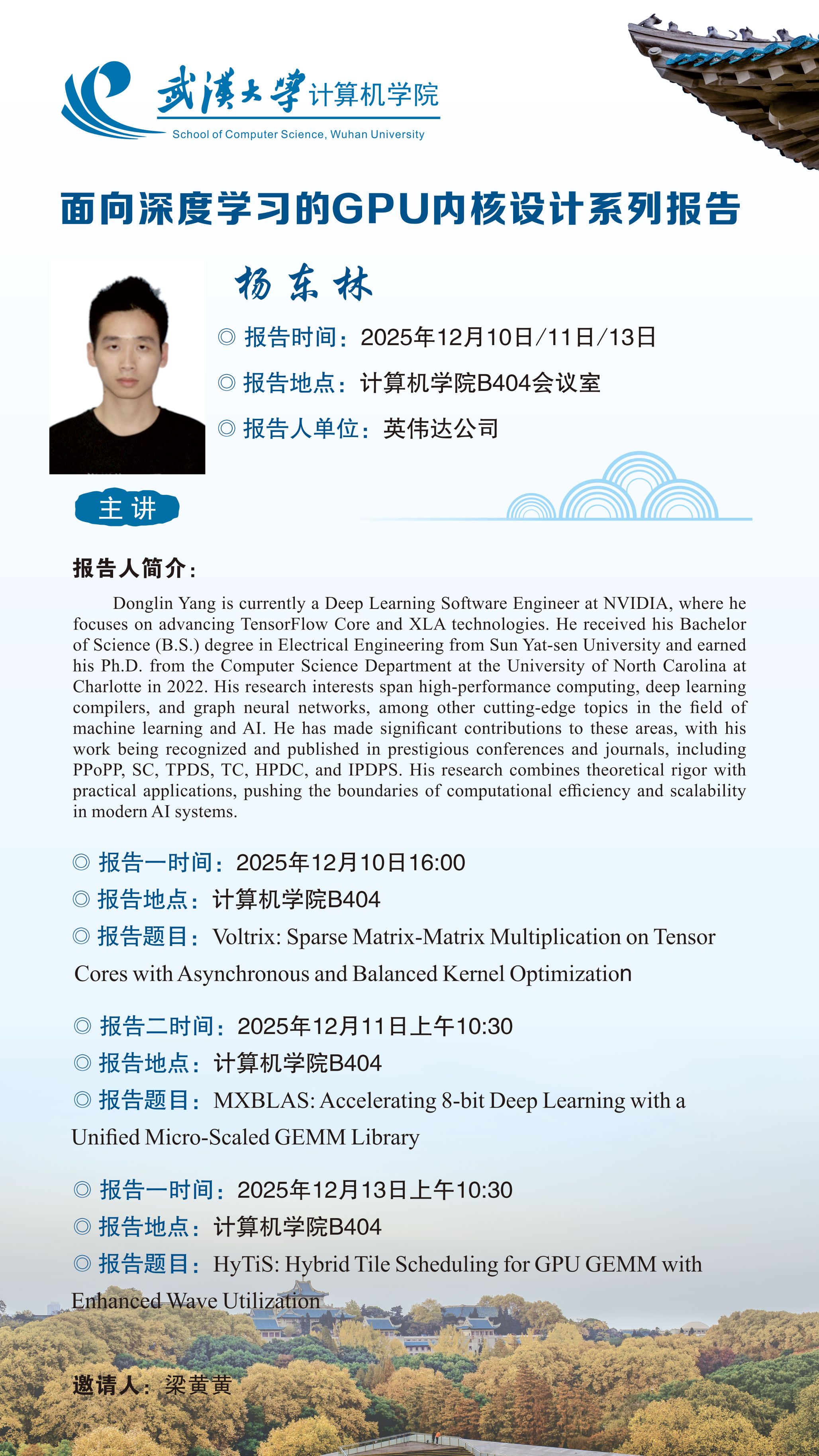

Speaker: Donglin Yang (杨东林)

Affiliation: NVIDIA

Speaker bio: Donglin Yang is currently a Deep Learning Software Engineer at NVIDIA, where he focuses on advancing TensorFlow Core and XLA technologies. He received his Bachelor of Science (B.S.) degree in Electrical Engineering from Sun Yat-sen University and earned his Ph.D. from the Computer Science Department at the University of North Carolina at Charlotte in 2022. His research interests include high-performance computing, deep learning compilers, and graph neural networks. His work has been published in conferences and journals including PPoPP, SC, TPDS, TC, HPDC, and IPDPS, and it combines theoretical rigor with practical application to improve the computational efficiency and scalability of modern AI systems.

Talk 1 time: December 10, 2025, 16:00

Venue: Avidol B404

Title: Voltrix: Sparse Matrix-Matrix Multiplication on Tensor Cores with Asynchronous and Balanced Kernel Optimization

Abstract: Sparse Matrix-Matrix Multiplication (SpMM) is crucial in scientific computing and machine learning. Despite advancements in GPU architectures, efficiently leveraging Tensor Cores for SpMM remains challenging. The core issue is the mismatch between the inherently sparse nature of the matrices and the dense computational patterns of Tensor Cores. Existing methods incur substantial overheads in loading data to the computation units and cannot adequately manage data imbalance across computations, which limits the high computational throughput that Tensor Cores can deliver.

In this paper, we introduce Voltrix-SpMM, a revolutionary GPU kernel design that overcomes these challenges. First, we implement an asynchronous data loading pipeline that employs a bit-wise compressed format for sparse matrices and bulk memory copy instructions for dense matrices. This design enables efficient data access and incorporates a warp-specialized producer-consumer model to overlap data loading with computation. Second, we develop a persistent and I/O co-balanced kernel mechanism that features a two-stage partition strategy to achieve balance between input and output. Implemented with CUDA 12.6, Voltrix-SpMM substantially improves performance, delivering average speedups of 36.5x and 1.8x over the Tensor Core-based TC-GNN and DTC-SpMM, respectively, and an average 1.7x speedup over the CUDA Core-based RoDe, fully unleashing the power of Tensor Cores for SpMM.
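For readers unfamiliar with bit-wise compressed sparse formats, the following is a minimal Python sketch of the general idea: one bit per tile element marks whether it is nonzero, and only the nonzero values are stored. The abstract does not specify the Voltrix-SpMM layout, so the tile shape, bit ordering, and function names here (`compress_tile`, `decompress_tile`) are hypothetical and chosen only for illustration.

```python
def compress_tile(tile):
    """Pack a dense 2-D tile into (bitmask, values).

    Bit i of the mask is set when the i-th element in row-major order is
    nonzero. This layout is an assumption, not the Voltrix-SpMM format.
    """
    mask, values = 0, []
    flat = [x for row in tile for x in row]
    for i, x in enumerate(flat):
        if x != 0:
            mask |= 1 << i
            values.append(x)
    return mask, values


def decompress_tile(mask, values, rows, cols):
    """Rebuild the dense tile from the bitmask and the packed values."""
    tile = [[0] * cols for _ in range(rows)]
    for i in range(rows * cols):
        if (mask >> i) & 1:
            # The number of set bits below position i is the index of this
            # element inside the packed value list.
            idx = bin(mask & ((1 << i) - 1)).count("1")
            tile[i // cols][i % cols] = values[idx]
    return tile


tile = [
    [0, 3, 0, 0],
    [0, 0, 0, 7],
    [5, 0, 0, 0],
    [0, 0, 2, 0],
]
mask, values = compress_tile(tile)
restored = decompress_tile(mask, values, 4, 4)
```

In this toy case a 16-element tile is stored as one 16-bit mask plus four values, and the popcount lookup lets each position locate its value without storing explicit column indices.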

Talk 2 time: December 11, 2025, 10:30 AM

Venue: Avidol B404

Title: MXBLAS: Accelerating 8-bit Deep Learning with a Unified Micro-Scaled GEMM Library

Abstract: Micro-scaling General Matrix Multiplication (MX-GEMM), which leverages 8-bit micro-scaling format (MX-format) inputs, represents a significant advancement in accelerating deep learning workloads. However, the diversity of the MX-format space contrasts with current model-oriented implementations, which suffer from rigid problem-kernel coupling, inefficient promotion operations, and unaddressed quantization overhead. In this paper, we present MXBLAS, a high-performance library that unifies support across the full spectrum of MX-format variations. MXBLAS addresses existing limitations through a template-based design for diverse promotion patterns, adaptive runtime kernel generation via guided auto-tuning, and a compute-store co-optimization strategy that fuses quantization into the kernel epilogue. Experimental results demonstrate that MXBLAS outperforms state-of-the-art MX-GEMM libraries by an average of 33%, marking the first realization of the full performance potential of generalized 8-bit computing.
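As background on micro-scaling, the sketch below shows the basic idea in Python: a block of values shares one power-of-two scale, and each element is stored as an 8-bit code. This is a simplified illustration and not the MXBLAS implementation. The block size of 32, the signed-integer element encoding, and the names `quantize_block` and `dequantize_block` are assumptions made for the example; the abstract covers a wider range of MX-format variations.

```python
import math

BLOCK = 32  # number of elements sharing one scale (assumed for illustration)


def quantize_block(block):
    """Return (exponent, codes) such that block[i] ~= codes[i] * 2**exponent."""
    max_abs = max(abs(x) for x in block)
    if max_abs == 0:
        return 0, [0] * len(block)
    # Smallest power-of-two scale that keeps every code within [-127, 127].
    exponent = math.ceil(math.log2(max_abs / 127))
    scale = 2.0 ** exponent
    # Clamp as a guard against floating-point edge cases in log2.
    codes = [max(-127, min(127, round(x / scale))) for x in block]
    return exponent, codes


def dequantize_block(exponent, codes):
    """Promote the 8-bit codes back to floats using the shared scale."""
    scale = 2.0 ** exponent
    return [c * scale for c in codes]


block = [0.01 * i - 0.15 for i in range(BLOCK)]
exponent, codes = quantize_block(block)
approx = dequantize_block(exponent, codes)
max_error = max(abs(a - b) for a, b in zip(block, approx))
```

Because the scale is shared per block rather than per tensor, a block of small values keeps more precision than it would under a single global scale, at the cost of one extra scale value per block.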

Talk 3 time: December 13, 2025, 10:30 AM

Title: HyTiS: Hybrid Tile Scheduling for GPU GEMM with Enhanced Wave Utilization and Cache Locality

Abstract: As modern GPUs scale in core count and adopt larger tile sizes, the wave quantization problem induced by partially filled waves results in growing hardware underutilization and substantially degraded performance. Existing solutions to this problem often suffer from low execution efficiency or introduce additional synchronization overhead. To address these challenges, we propose HyTiS, a hybrid tile scheduling framework that combines two-level tile scheduling with adaptive tile layout selection. The first level improves throughput by maximizing utilization in full waves, while the second level reduces latency in partial waves through fine-grained tiling. To minimize tuning overhead, HyTiS performs an offline profiling phase to identify throughput- and latency-optimized micro-kernels, forming an efficient runtime search space across diverse tensor workloads. Additionally, we study the impact of tile layouts on L2 cache behavior and introduce an analytical model to select layouts that minimize data movement from global memory to L2 cache at the wave granularity. Extensive evaluations on NVIDIA H100 and A100 GPUs show that HyTiS achieves significant speedups, up to 1.95× and 2.08× over cuBLAS, respectively. Detailed system-level analyses further demonstrate the effectiveness of HyTiS in mitigating wave quantization and improving L2 cache affinity.
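The wave quantization effect mentioned in the abstract can be illustrated with a small calculation. The Python sketch below assumes the simplest scheduling model, in which each streaming multiprocessor (SM) processes one output tile per wave; the function name `wave_stats`, the problem size, the tile shape, and the SM count of 132 are illustrative choices and are not taken from the HyTiS paper.

```python
import math


def wave_stats(m, n, tile_m, tile_n, num_sms):
    """Count output tiles and waves, assuming one tile per SM per wave."""
    tiles = math.ceil(m / tile_m) * math.ceil(n / tile_n)
    waves = math.ceil(tiles / num_sms)
    # Tiles left for the final, possibly partial, wave.
    last_wave_tiles = tiles - (waves - 1) * num_sms
    # Fraction of SM time slots that do useful work across all waves.
    utilization = tiles / (waves * num_sms)
    return tiles, waves, last_wave_tiles, utilization


# A 2432 x 1792 output with 128 x 256 tiles yields 19 * 7 = 133 tiles.
tiles, waves, last_wave_tiles, utilization = wave_stats(2432, 1792, 128, 256, 132)
print(tiles, waves, last_wave_tiles, round(utilization, 3))
```

Under these assumptions a single extra tile forces a second wave in which 131 of the 132 SMs sit idle, so overall utilization drops to roughly 50%. A partial wave like this is the case the abstract's second scheduling level addresses with finer-grained tiling.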

Host: Huanghuang Liang (梁黄黄)